Eugene P. W. Ang

A Unified Deep Semantic Expansion Framework for Domain-Generalized Person Re-identification

Oct 11, 2024

Abstract: Supervised Person Re-identification (Person ReID) methods have achieved excellent performance when trained and tested within one camera network. However, they usually suffer considerable performance degradation when applied to different camera systems. In recent years, many Domain Adaptation Person ReID methods have been proposed, achieving impressive performance without requiring labeled data from the target domain. However, these approaches still need unlabeled target-domain data during training, making them impractical in many real-world scenarios. Our work focuses on the more practical Domain Generalized Person Re-identification (DG-ReID) problem: given one or more source domains, the aim is to learn a generalized model that can be applied to unseen target domains. One promising research direction in DG-ReID is implicit deep semantic feature expansion, and our previous method, Domain Embedding Expansion (DEX), is one such example that achieves strong results in DG-ReID. However, in this work we show that DEX and similar implicit deep semantic feature expansion methods, due to limitations in their proposed loss function, fail to reach their full potential on large evaluation benchmarks because they tend to saturate too early. Building on this analysis, we propose Unified Deep Semantic Expansion, a novel framework that unifies implicit and explicit semantic feature expansion techniques to mitigate this early over-fitting and achieve a new state-of-the-art (SOTA) in all DG-ReID benchmarks. Further, we apply our method to more general image retrieval tasks, also surpassing the current SOTA in all of these benchmarks by wide margins.

Diverse Deep Feature Ensemble Learning for Omni-Domain Generalized Person Re-identification

Oct 11, 2024

Abstract: Person Re-identification (Person ReID) has progressed to a level where single-domain supervised performance has saturated. However, such methods experience a significant drop in performance when trained and tested across different datasets, motivating the development of domain generalization techniques. Our research reveals, in turn, that domain generalization methods significantly underperform single-domain supervised methods on single-dataset benchmarks. An ideal Person ReID method should be effective regardless of the number of domains involved, and when test-domain data is available for training it should perform as well as state-of-the-art (SOTA) fully supervised methods. We call this paradigm Omni-Domain Generalization Person ReID (ODG-ReID). We propose a way to achieve ODG-ReID by creating deep feature diversity with self-ensembles. Our method, Diverse Deep Feature Ensemble Learning (D2FEL), deploys unique instance normalization patterns that generate multiple diverse views and recombines these views into a compact encoding. To the best of our knowledge, our work is one of the few to consider omni-domain generalization in Person ReID, and we advance the study of applying feature ensembles in Person ReID. D2FEL significantly improves on or matches the SOTA performance for major domain generalization and single-domain supervised benchmarks.
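The idea of generating diverse views with different instance normalization patterns and recombining them can be illustrated with a small sketch. This is not the D2FEL implementation: the channel masks, the max pooling, and the averaging step (`make_view`, `pool`, `ensemble_encoding`) are hypothetical choices made only to show the general shape of such a self-ensemble.

```python
import math

def instance_norm(channel, eps=1e-5):
    """Normalize one channel's spatial activations to zero mean, unit variance."""
    mean = sum(channel) / len(channel)
    var = sum((x - mean) ** 2 for x in channel) / len(channel)
    return [(x - mean) / math.sqrt(var + eps) for x in channel]

def make_view(feature_map, mask):
    """Build one view: normalize only the channels whose mask entry is True.

    The mask plays the role of an 'instance normalization pattern' (assumed form).
    """
    return [instance_norm(ch) if use_in else list(ch)
            for ch, use_in in zip(feature_map, mask)]

def pool(view):
    """Global max pooling: one scalar per channel."""
    return [max(ch) for ch in view]

def ensemble_encoding(feature_map, masks):
    """Average the pooled views into one vector with one entry per channel."""
    pooled = [pool(make_view(feature_map, m)) for m in masks]
    return [sum(p[c] for p in pooled) / len(pooled)
            for c in range(len(feature_map))]

# Toy feature map: 2 channels, 4 spatial positions each.
feature_map = [[1.0, 2.0, 3.0, 4.0], [10.0, 12.0, 10.0, 12.0]]
# Three views: normalize channel 0 only, channel 1 only, or neither.
masks = [(True, False), (False, True), (False, False)]
encoding = ensemble_encoding(feature_map, masks)
```

The encoding keeps the size of a single view regardless of how many views are combined, which is one simple way to read "compact encoding".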

Aligned Divergent Pathways for Omni-Domain Generalized Person Re-Identification

Oct 11, 2024

Abstract: Person Re-identification (Person ReID) has advanced significantly in both the fully supervised and the domain generalized settings. However, methods developed for one setting transfer poorly to the other. An ideal Person ReID method should be effective regardless of the number of domains involved in training or testing. Furthermore, given training data from the target domain, it should perform at least as well as state-of-the-art (SOTA) fully supervised Person ReID methods. We call this paradigm Omni-Domain Generalization Person ReID (ODG-ReID) and propose a way to achieve it by expanding compatible backbone architectures into multiple diverse pathways. Our method, Aligned Divergent Pathways (ADP), first converts a base architecture into a multi-branch structure by copying the tail of the original backbone. We design a module, Dynamic Max-Deviance Adaptive Instance Normalization (DyMAIN), that encourages the learning of generalized features that are robust to omni-domain directions, and apply DyMAIN to the branches of ADP. Our proposed Phased Mixture-of-Cosines (PMoC) coordinates a mix of stable and turbulent learning rate schedules among branches for further diversified learning. Finally, we realign the feature space between branches with our proposed Dimensional Consistency Metric Loss (DCML). ADP outperforms the SOTA results for multi-source domain generalization and for supervised ReID within the same domain. Furthermore, our method demonstrates improvement on a wide range of single-source domain generalization benchmarks, achieving Omni-Domain Generalization over Person ReID tasks.
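To make the notion of mixing stable and turbulent learning rate schedules across branches concrete, here is a minimal sketch using cosine annealing with a different number of restarts per branch. It is an assumption-laden illustration, not the PMoC algorithm: the function `cosine_lr`, the branch names, the cycle counts, and the learning rate bounds are all hypothetical.

```python
import math

def cosine_lr(step, total_steps, cycles=1, lr_max=0.01, lr_min=0.0):
    """Cosine-annealed learning rate that restarts `cycles` times over training."""
    cycle_len = total_steps // cycles
    pos = (step % cycle_len) / cycle_len  # position within the current cycle, in [0, 1)
    return lr_min + 0.5 * (lr_max - lr_min) * (1.0 + math.cos(math.pi * pos))

# Hypothetical branch configuration: one smooth decay versus frequent restarts.
branch_cycles = {"stable": 1, "turbulent": 4}

def branch_lrs(step, total_steps=100):
    """Learning rate of every branch at a given training step."""
    return {name: cosine_lr(step, total_steps, cycles=c)
            for name, c in branch_cycles.items()}

# At step 25 the turbulent branch has just restarted at lr_max,
# while the stable branch is partway down its single decay.
rates = branch_lrs(25)
```

In a real training loop each branch would typically get its own optimizer parameter group so that these rates can be applied independently.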

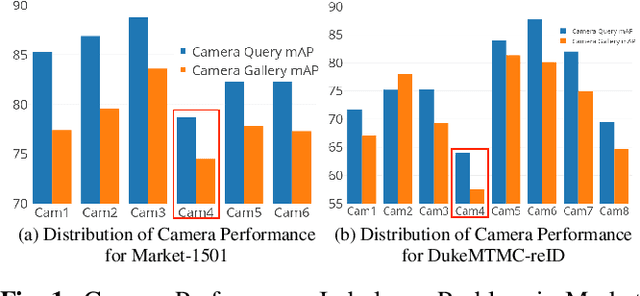

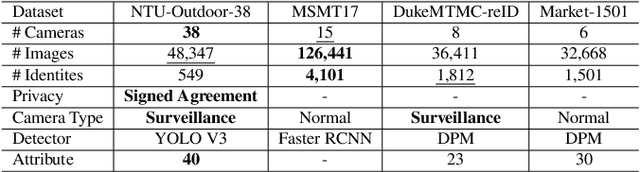

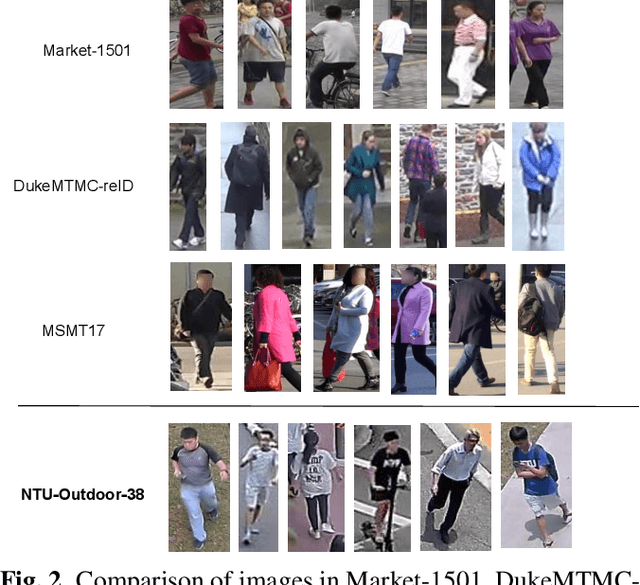

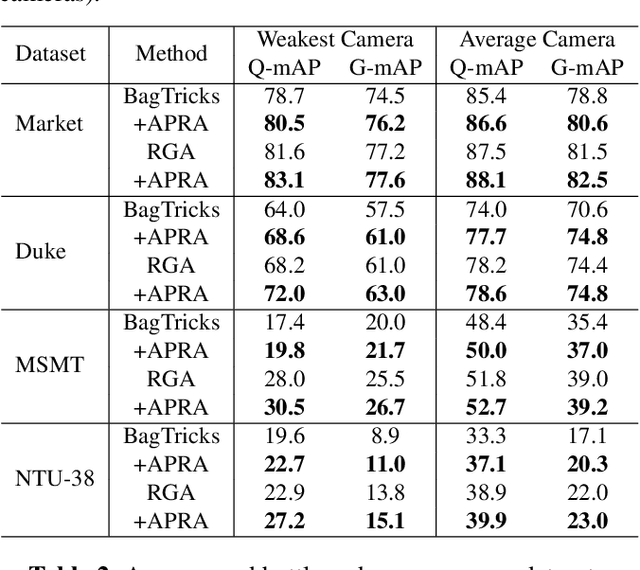

Adversarial Pairwise Reverse Attention for Camera Performance Imbalance in Person Re-identification: New Dataset and Metrics

Jul 04, 2022

Abstract: Existing evaluation metrics for Person Re-Identification (Person ReID) models focus on system-wide performance. However, our studies reveal weaknesses, caused by uneven data distributions among cameras and by differing camera properties, that expose the ReID system to exploitation. In this work, we raise the long-ignored ReID problem of camera performance imbalance and collect a real-world, privacy-aware dataset from 38 cameras to assist the study of this issue. We propose new metrics to quantify camera performance imbalance and further propose the Adversarial Pairwise Reverse Attention (APRA) module, which guides the model to learn camera-invariant features through a novel pairwise attention inversion mechanism.

DEX: Domain Embedding Expansion for Generalized Person Re-identification

Oct 21, 2021

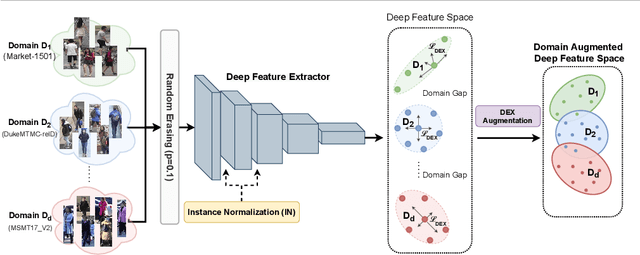

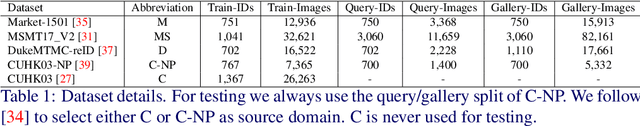

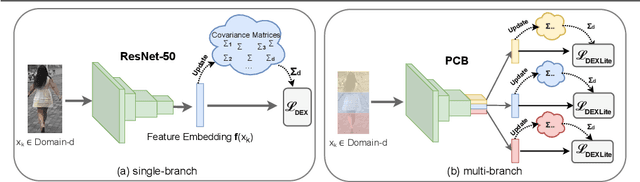

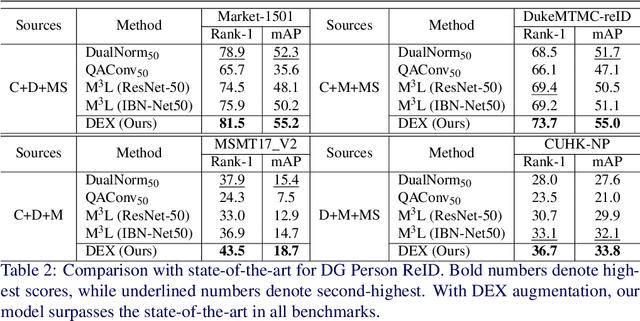

Abstract: In recent years, supervised Person Re-identification (Person ReID) approaches have demonstrated excellent performance. However, when these methods are applied to inputs from a different camera network, they typically suffer significant performance degradation. Unlike most domain adaptation (DA) approaches that address this issue, we focus on developing a domain generalization (DG) Person ReID model that can be deployed without additional fine-tuning or adaptation. In this paper, we propose the Domain Embedding Expansion (DEX) module. DEX dynamically manipulates and augments deep features based on person and domain labels during training, significantly improving the generalization capability and robustness of Person ReID models to unseen domains. We also develop a light version of DEX (DEXLite), which applies negative sampling techniques to scale to larger datasets and reduce memory usage for multi-branch networks. DEX and DEXLite can be combined in a plug-and-play manner with many existing methods, such as Bag-of-Tricks (BagTricks), the Multi-Granularity Network (MGN), and the Part-Based Convolutional Baseline (PCB). With DEX and DEXLite, existing methods gain significant improvements when tested on unseen datasets, demonstrating the general applicability of our method. Our solution outperforms the state-of-the-art DG Person ReID methods in all large-scale benchmarks as well as in most of the small-scale benchmarks.
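As a rough illustration of augmenting deep features using domain labels, the sketch below perturbs each feature vector with Gaussian noise scaled by the per-dimension variance of its own domain. This is an explicit, sampling-based stand-in and not the DEX loss itself; `domain_variance`, `expand`, and the `strength` parameter are hypothetical names introduced only for this example, and person labels are left out for brevity.

```python
import random

def domain_variance(features, domains):
    """Per-domain, per-dimension variance of the feature vectors."""
    stats = {}
    for d in set(domains):
        rows = [f for f, dom in zip(features, domains) if dom == d]
        dim = len(rows[0])
        means = [sum(r[i] for r in rows) / len(rows) for i in range(dim)]
        stats[d] = [sum((r[i] - means[i]) ** 2 for r in rows) / len(rows)
                    for i in range(dim)]
    return stats

def expand(features, domains, strength=0.5, seed=0):
    """Return perturbed copies of the features.

    Each dimension receives zero-mean Gaussian noise whose variance is
    `strength` times that dimension's variance within the sample's domain,
    so dimensions that do not vary inside a domain are left unchanged.
    """
    rng = random.Random(seed)
    var = domain_variance(features, domains)
    return [[x + rng.gauss(0.0, (strength * v) ** 0.5)
             for x, v in zip(f, var[d])]
            for f, d in zip(features, domains)]

# Toy batch: two samples from domain "a", one from domain "b".
features = [[1.0, 2.0], [3.0, 2.0], [0.0, 0.0]]
domains = ["a", "a", "b"]
augmented = expand(features, domains)
```

Implicit expansion methods avoid this sampling step by folding the expected effect of such perturbations into the loss; the sketch only shows the explicit counterpart.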
